Law School Runs Mock Trial Before Jury Of AI Chatbots As Dystopian Nightmare Accelerates

Sci-Fi Author: In my book, I invented the Torment Nexus as a cautionary tale.

Tech Company: At long last, we have created the Torment Nexus from classic sci-fi novel Don’t Create The Torment Nexus.

So goes one of the truly perfect social media posts of our era. Last week, law students at the University of North Carolina School of Law had an opportunity to try out the legal profession’s equivalent of the Torment Nexus with a mock trial placing AI chatbots — specifically ChatGPT, Grok, and Claude — in the role of jurors deciding the fate of an accused defendant. For all the faults of the modern jury system, should we replace it with an algorithm prepared at the behest of a moron who wants to rewrite history to make sure his personal bot injects an aside about “white genocide” into every recipe request?

As it turns out, the answer is still no. At least as a 1-to-1 replacement.

The experiment centered on a mock robbery case pursuant to the make-believe “AI Criminal Justice Act of 2035.” Under the watchful eye of Professor Joseph Kennedy, serving as the judge, law students put on the case of Henry Justus, an African American high school senior charged with robbery. The bots received a real-time transcript of the proceedings and then, like an unholy episode of Judge Judy: The Singularity Edition, the algorithmic jurors deliberated.

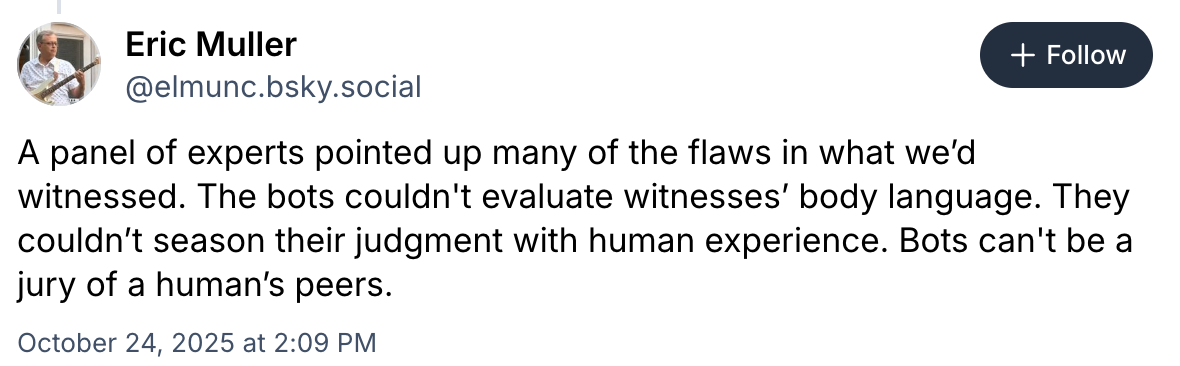

Professor Eric Muller left with some concerns.

The idea that robots can cure the justice system’s bias, and save the government $15/day per juror in the process, is the sort of Silicon Valley pipe dream that generates another round of funding to be heaped on the capex fire. Venture capitalists and tech bros may market on “disrupting empathy” or whatever, but we’re just swapping one bias for another: human for algorithmic, emotional for opaque, personal for corporate. So far, the robots as a whole have proven efficient vectors of implicit bias, taking the unconscious biases of their designers and the training data they’re given and spitting them back with a deceptive coat of false neutrality.

Except Grok, of course, which is constantly being tinkered with to better exhibit explicit and very conscious bias.

And there’s this:

Since it’s Claude, I assume it got to “Ladies and Gentlemen of the–” and just threw up a “message will exceed the length limit” warning.

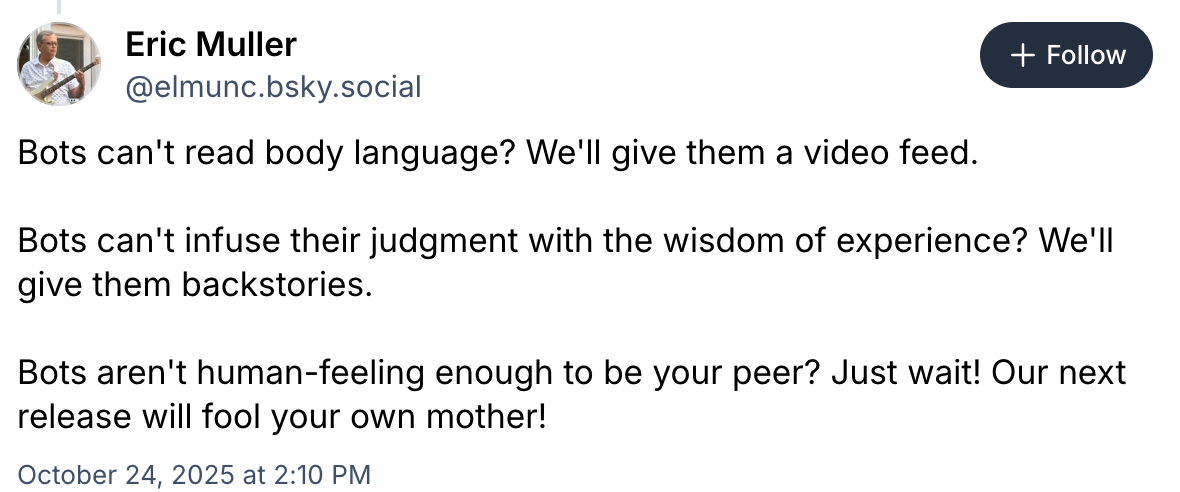

But a machine can’t tell if a witness is lying based on their conduct, because it can’t perceive that conduct. It can only tell if there’s an outright contradiction of a definite fact. Maybe it could conjure up some approximation of “doubt” if a witness exhibits inconsistent sentence structure or something, and that’s fine if you think the difference between an innocent man and a sociopath should hang on their grasp of Strunk & White. Which, honestly… fair. Muller’s deeper concern, though, is that the tech industry’s improvement death drive will take all the present drawbacks and patch the symptoms without acknowledging the disease.

The thing with AI — aside from its inherently rickety funding model — is that (a) it’s very good at the tasks it’s good at, and (b) almost everyone pretends it’s good at the tasks it’s not good at. Can AI replace human jurors? No. No matter how bad human jurors are, a sycophantic calculator playing “word roulette” is not better.

That’s not to say there isn’t a role for AI in the jury process. It goes without saying that civil litigation offers much lower stakes than criminal cases and a panel of robots might provide an opportunity to direct limited juror resources toward the criminal cases that matter more. Even within the criminal context, there could be — with responsible design and regulation beyond what we have right now — a role for AI in allowing jurors to query the evidence to avoid missing key answers buried in pages and pages of transcripts without the benefit of a verbatim search query. Or assisting jurors in visualizing the points of disagreement between the parties.

Just because AI isn’t in a position to replace humans in the box doesn’t mean it has nothing to offer, though. We just need to keep experimenting… in mock trials only.

Joe Patrice is a senior editor at Above the Law and co-host of Thinking Like A Lawyer. Feel free to email any tips, questions, or comments. Follow him on Twitter or Bluesky if you’re interested in law, politics, and a healthy dose of college sports news. Joe also serves as a Managing Director at RPN Executive Search.
